Trusting Your Data: Solving the Quality Crisis in Modern Infrastructure

TL;DR: The Reliability Roadmap

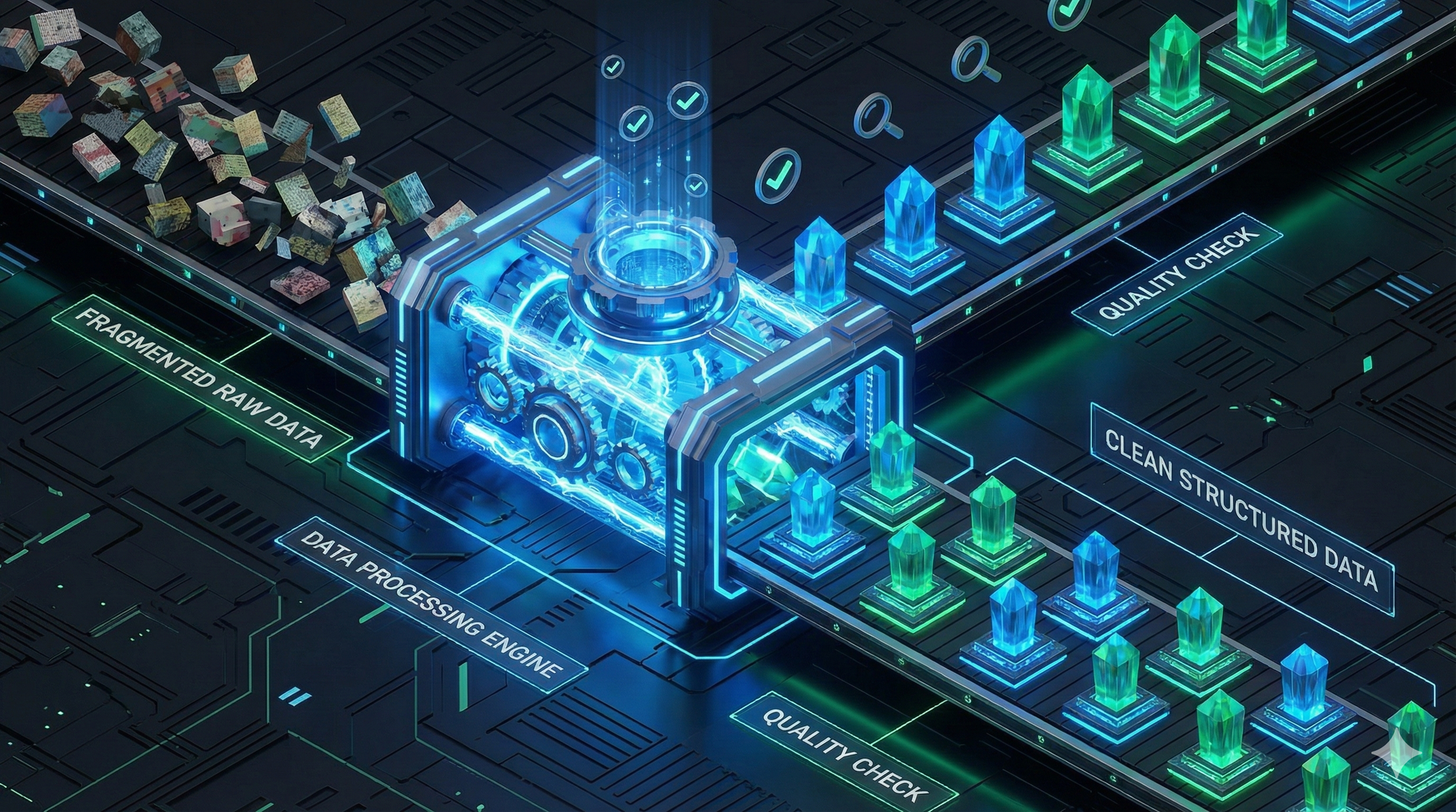

- The Problem: Bad data in = Bad decisions out. Even with great security, if your data is duplicated, null, or outdated, it’s a liability.

- The Solution: An integrated Data Quality (DQ) framework.

- The Strategy:

  - Profiling: Understanding the "health" of your data before you use it.

  - Automated Expectations: Setting "rules" (e.g., this column should never be null).

  - Integrated Governance: Using Apache Atlas to track where quality issues start.

- The Big Win: Moving from "I hope this report is right" to "I know this data is accurate."

The Silent Killer: Why "Good" Security Isn't Enough

Most IT professionals spend their time worrying about Access Control (who can see the data). But as I’ve progressed in my journey, I’ve realized that a perfectly secured database is useless if the data inside it is wrong.

Duplicates, missing values, and "schema drift" (source columns changing name or type without notice) are the silent killers of IT projects. If your AI model or your CEO's dashboard is fed poor-quality data, the resulting damage can be worse than a minor security breach: it leads to expensive strategic mistakes.

Building a Quality Framework in CDP

In the Cloudera Data Platform (CDP), we don't just "check" data; we build quality checks into the lifecycle. Here is how I’m approaching the quality layer:

1. Data Profiling (The Health Check)

Before a single row is moved, we use the Data Catalog to profile the source. This gives us the "Pulse" of the data:

- What is the percentage of nulls?

- Are the values within a realistic range?

- Are there unexpected patterns?

If the profile fails any of these checks, the ingestion stops.
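To make the health check concrete, here is a minimal sketch in plain Python. It does not use the Data Catalog profiler. The function names, the sample `amounts` column, and the thresholds (5% nulls, 1% out of range) are all assumptions chosen for illustration. It only shows the idea of computing a profile and using it as a gate.

```python
from dataclasses import dataclass


@dataclass
class ColumnProfile:
    null_pct: float          # percentage of missing values, 0-100
    out_of_range_pct: float  # percentage of non-null values outside [low, high]


def profile_column(values, low, high):
    """Profile one numeric column given as a list where None marks a null."""
    total = len(values)
    if total == 0:
        # An empty column has nothing to measure; report zeros.
        return ColumnProfile(null_pct=0.0, out_of_range_pct=0.0)
    non_null = [v for v in values if v is not None]
    nulls = total - len(non_null)
    out_of_range = sum(1 for v in non_null if not (low <= v <= high))
    return ColumnProfile(
        null_pct=100.0 * nulls / total,
        out_of_range_pct=100.0 * out_of_range / len(non_null) if non_null else 0.0,
    )


def should_ingest(profile, max_null_pct=5.0, max_out_of_range_pct=1.0):
    """Gate: return False (stop ingestion) if the profile breaches a threshold.

    The default thresholds are hypothetical, not recommended values.
    """
    return (profile.null_pct <= max_null_pct
            and profile.out_of_range_pct <= max_out_of_range_pct)


# Hypothetical "amount" column with one null and one implausible value.
amounts = [10.0, 25.5, None, 40.0, -999.0, 12.0, 18.0, 30.0, 22.0, 15.0]
profile = profile_column(amounts, low=0.0, high=10_000.0)
print(profile)
print("ingest" if should_ingest(profile) else "stop ingestion")
```

In a real pipeline the same gate would read the profiler's output rather than a Python list, and the thresholds would be agreed per dataset.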

2. Defining "Expectations"

I’ve been exploring frameworks like Great Expectations (integrated via Spark) to set hard rules for our pipelines. In a "DevLog" mindset, we treat data quality rules like unit tests for code.

- Expectation: `column_A` must be a valid email format.

- Expectation: `column_B` must always be unique.

If a batch of data fails these tests, it’s quarantined in an "Error Zone" rather than polluting the production Lakehouse.
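The two expectations and the quarantine step can be sketched in plain Python. This is not the Great Expectations API. The helper names, the zone labels, and the deliberately simple email pattern are assumptions for illustration, and a batch here is just a list of dictionaries.

```python
import re

# Deliberately simple pattern (something@something.tld); an assumption for
# this sketch, not a complete email validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def expect_valid_email(rows, column):
    """Return indexes of rows whose value in `column` is not a plausible email."""
    return [
        i for i, row in enumerate(rows)
        if not isinstance(row.get(column), str) or not EMAIL_RE.match(row[column])
    ]


def expect_unique(rows, column):
    """Return indexes of rows whose value in `column` repeats an earlier row."""
    seen, duplicates = set(), []
    for i, row in enumerate(rows):
        value = row.get(column)
        if value in seen:
            duplicates.append(i)
        seen.add(value)
    return duplicates


def route_batch(rows):
    """Send the whole batch to the error zone if any expectation fails."""
    results = {
        "column_A is a valid email": expect_valid_email(rows, "column_A"),
        "column_B is unique": expect_unique(rows, "column_B"),
    }
    # Keep only the expectations that actually have failing rows.
    failures = {name: idx for name, idx in results.items() if idx}
    zone = "error_zone" if failures else "production"
    return zone, failures


# Hypothetical batch: row 1 has a bad email, row 2 repeats column_B from row 0.
batch = [
    {"column_A": "ana@example.com", "column_B": 1},
    {"column_A": "not-an-email", "column_B": 2},
    {"column_A": "bo@example.com", "column_B": 1},
]
zone, failures = route_batch(batch)
print(zone, failures)
```

Returning the failing row indexes alongside the zone keeps the quarantine useful: whoever opens the Error Zone can see which rule broke and where, just like a failing unit test names the assertion.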

3. The Quality-Governance Loop

This is where Apache Atlas comes back into play. When a quality check fails, we link that failure to the Data Lineage. This allows us to look at a broken report and say: "The error isn't in the report; it started three steps back in the ingestion from the CRM system." That visibility is a game-changer for troubleshooting.
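The "three steps back" idea can be illustrated with a small walk over a lineage graph. This sketch does not call Apache Atlas. The `LINEAGE` dictionary, the dataset names, and the set of failed checks are hypothetical stand-ins for what the lineage and quality results would provide.

```python
# Hypothetical lineage: each dataset maps to the datasets it is built from.
LINEAGE = {
    "ceo_dashboard": ["sales_mart"],
    "sales_mart": ["cleaned_orders"],
    "cleaned_orders": ["crm_ingest"],
    "crm_ingest": [],
}

# Hypothetical set of datasets whose latest quality check failed.
FAILED_CHECKS = {"sales_mart", "cleaned_orders", "crm_ingest"}


def find_failure_origins(dataset, lineage, failed):
    """Walk upstream from `dataset` and return where failures start.

    A dataset counts as an origin if its own check failed and none of its
    direct upstream sources failed.
    """
    origins, seen, stack = [], set(), [dataset]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        parents = lineage.get(node, [])
        if node in failed and not any(p in failed for p in parents):
            origins.append(node)
        stack.extend(parents)
    return origins


print(find_failure_origins("ceo_dashboard", LINEAGE, FAILED_CHECKS))
```

With this sample data the walk starts at the dashboard and ends at `crm_ingest`, the only failed dataset with no failed source above it.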

The "Rizki" Take: Data Quality is an IT Responsibility

Early in my career, I thought data quality was for "Data Scientists" or "Business Analysts." I was wrong.

As IT Techs, we own the pipes. If the water coming out of the pipes is dirty, the plumber is the first person people call. By implementing automated quality checks and profiling within our infrastructure, we stop being reactive and start delivering Trusted Data as a service.